Cohere's Aya Vision: Open AI Model for Multilingual Understanding

Cohere For AI, the research arm of AI startup Cohere, just dropped a bombshell: Aya Vision, a multimodal "open" AI model they're touting as best-in-class. But what does that actually mean for you?

Essentially, Aya Vision can handle a bunch of cool tasks. Think writing captions for images, answering questions about those photos, translating text like a pro, and even generating summaries in 23 major languages! Cohere is even making it available for free via WhatsApp, aiming to democratize access to cutting-edge AI research.

Bridging the Language Gap

Cohere highlights a major problem in the AI world: language bias. Models often perform much better in English, leaving other languages behind, especially in tasks involving both images and text. Aya Vision is specifically designed to tackle this gap.

Two Flavors of Aya Vision

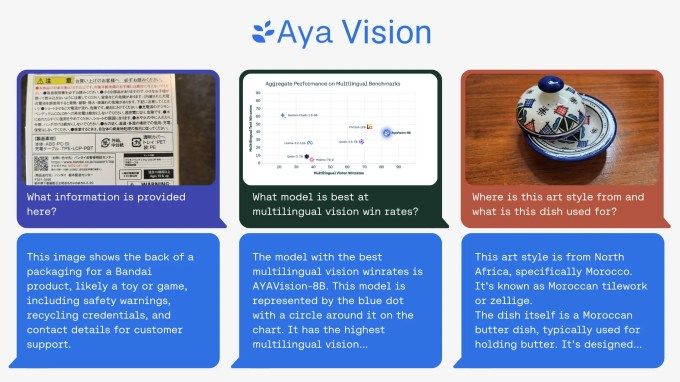

You've got two options here: Aya Vision 32B and Aya Vision 8B. The 32B version is the heavyweight; according to Cohere, it outperforms models twice its size, including Meta's Llama-3.2 90B Vision, on certain visual understanding benchmarks. The 8B version also holds its own, scoring better than models ten times its size in some evaluations.

Both models are available on Hugging Face under a Creative Commons Attribution-NonCommercial 4.0 (CC BY-NC 4.0) license, and that's the catch: they can't be used for commercial applications.

Synthetic Data to the Rescue?

Here's where things get interesting. Aya Vision was trained using a mix of English datasets, which were then translated and used to create synthetic annotations. These annotations act as tags or labels, helping the model understand and interpret data during training. Think of it like labeling objects in a photo so the AI knows what it's looking at.
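To make the idea concrete, here is a minimal sketch of how English captions could be expanded into multilingual annotations. It is not Cohere's actual pipeline: the `Annotation` record, the `build_multilingual_annotations` helper, and the placeholder `fake_translate` function are all hypothetical names invented for illustration, and the sketch assumes the English captions are the non-synthetic starting point.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Annotation:
    image_id: str
    language: str
    caption: str
    synthetic: bool  # True when the caption was machine-produced


def build_multilingual_annotations(
    english: Dict[str, str],
    languages: List[str],
    translate: Callable[[str, str], str],
) -> List[Annotation]:
    """Keep each English caption and add one translated caption per target language."""
    # Assumption: the English captions are treated as the original labels.
    records = [Annotation(img, "en", cap, synthetic=False) for img, cap in english.items()]
    for img, cap in english.items():
        for lang in languages:
            records.append(Annotation(img, lang, translate(cap, lang), synthetic=True))
    return records


def fake_translate(text: str, lang: str) -> str:
    # Placeholder only: a real pipeline would call a machine translation model here.
    return f"[{lang}] {text}"


english_captions = {"img_001": "A dog running on a beach."}
annotations = build_multilingual_annotations(english_captions, ["fr", "hi"], fake_translate)
for a in annotations:
    print(a.language, a.caption)
```

One English label becomes three training records here, which is the appeal of the approach: coverage in new languages grows without collecting new images.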

Using synthetic data is becoming increasingly common as the supply of real-world data dwindles. Rivals including OpenAI are also leaning on synthetic data to train their models. Gartner estimates that a whopping 60% of the data used for AI and analytics projects last year was synthetically generated.

Cohere claims that training Aya Vision with synthetic annotations allowed them to achieve competitive performance with fewer resources. This focus on efficiency is crucial, especially for researchers with limited computing power.

A New Benchmark: AyaVisionBench

Cohere isn't just releasing a model; they're also introducing AyaVisionBench, a new benchmark suite for evaluating a model's skills in vision-language tasks. This includes things like spotting differences between images and converting screenshots into code.

The AI industry is facing an "evaluation crisis" because current benchmarks often don't accurately reflect how well a model performs in real-world scenarios. Cohere hopes AyaVisionBench will address this by providing a more comprehensive way to assess cross-lingual and multimodal understanding.
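How AyaVisionBench actually scores models isn't described here, so the following is only a generic sketch of what a cross-lingual comparison can look like: compute accuracy per language, then measure how far each language trails English. The function names and the toy results are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def accuracy_by_language(results: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the fraction of correct answers for each language code."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for lang, is_correct in results:
        totals[lang] += 1
        correct[lang] += int(is_correct)
    return {lang: correct[lang] / totals[lang] for lang in totals}


def gap_to_english(scores: Dict[str, float]) -> Dict[str, float]:
    """Return how many accuracy points each non-English language trails English by."""
    return {lang: scores["en"] - s for lang, s in scores.items() if lang != "en"}


# Toy data (invented): (language, answered correctly?) for each test question.
results = [
    ("en", True), ("en", True), ("en", False), ("en", True),
    ("sw", True), ("sw", False), ("sw", False), ("sw", True),
]
scores = accuracy_by_language(results)
print(scores)
print(gap_to_english(scores))
```

Reporting the gap per language, rather than a single averaged score, is what makes the kind of language bias described earlier visible.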

The Goal: Better, More Accessible AI

Ultimately, Cohere's goal is to create AI that's not only powerful but also accessible to a wider range of researchers and users. By focusing on efficiency, multilingual capabilities, and robust evaluation, Aya Vision could be a significant step in the right direction.

“[T]he dataset serves as a robust benchmark for evaluating vision-language models in multilingual and real-world settings,” Cohere researchers wrote of AyaVisionBench in a post on Hugging Face. “We make this evaluation set available to the research community to push forward multilingual multimodal evaluations.”

Source: TechCrunch