Character AI Adds Parental Supervision Tools for Teen Safety

Character AI, the platform that lets users create and chat with AI characters, is taking steps to make the service safer for younger users. In response to a series of lawsuits and concerns about how it protects minors, the company has announced new parental supervision tools.

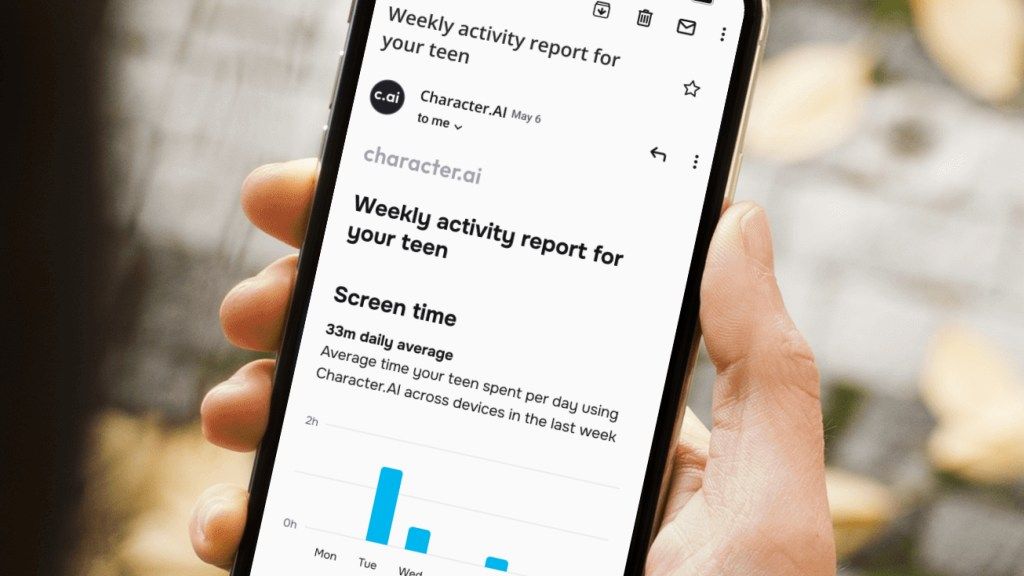

The tools are meant to give parents and guardians insight into their teens' activity on the platform. The key feature is a weekly email summary that reports the teen's average time spent on the app and website, how long they spent talking with specific AI characters, and which characters they interacted with most often that week.

Character AI emphasizes that the data is intended to help parents understand their teens' engagement habits on the platform, without giving them access to the content of the chats themselves.

Existing Safety Measures

This isn't Character AI's first safety update. Last year, the company introduced several measures, including a dedicated AI model for users under 18, notifications about time spent on the app, and disclaimers reminding users that they are talking to an AI. It also built new classifiers designed to block sensitive content for teen users.

The push for greater parental supervision follows a lawsuit filed against Character AI earlier this year alleging that the company played a role in a teen's suicide. The company has filed a motion to dismiss the suit.

Source: TechCrunch